This week Meta open-sourced and hosted SAM (Segment Anything Model), along with the dataset used to train it. The interactive web UI can be found here.

As the image shows, the model can segment a picture into its relevant objects. It is also interactive: a human in the loop can add or remove points to refine an object's mask.

SAM is a foundation model for image segmentation. Foundation models are models pre-trained on very large datasets, often with self-supervised learning, that can then be applied to a wide range of problems.

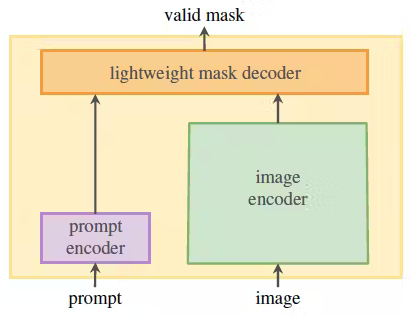

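SAM's architecture consists of 3 components: an image encoder, a prompt encoder and a lightweight mask decoder. The mask decoder takes the image and prompt embeddings and predicts the output masks. Because the image embedding is computed once per image, each new prompt only has to pass through the prompt encoder and the mask decoder, which is what keeps the interactive UI responsive.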
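To make the data flow between the three components concrete, here is a minimal toy sketch in Python. It is not SAM's implementation: the real image encoder is a Vision Transformer and the real decoder is a transformer, whereas here every component is a deliberately trivial, hypothetical stand-in (`image_encoder` averages pixel patches, `prompt_encoder` maps clicks to patches, `mask_decoder` thresholds feature similarity). What it does show is the structure: the image is encoded once, and different point prompts reuse that embedding. The 1/0 point labels follow the foreground/background convention of the open-source `segment_anything` package, where the corresponding real calls are `SamPredictor.set_image` and `SamPredictor.predict(point_coords=..., point_labels=...)`.

```python
from typing import List, Tuple

Image = List[List[float]]      # toy grayscale image, row-major, values in [0, 1]
Embedding = List[List[float]]  # toy "embedding": one feature (mean intensity) per patch
Prompt = Tuple[int, int, int]  # (patch_row, patch_col, label); 1 = foreground, 0 = background


def image_encoder(image: Image, patch: int = 2) -> Embedding:
    """Hypothetical stand-in for SAM's ViT encoder: average every patch x patch block.
    Assumes the image height and width are multiples of `patch`."""
    h, w = len(image), len(image[0])
    return [
        [
            sum(image[y][x] for y in range(r, r + patch) for x in range(c, c + patch)) / patch**2
            for c in range(0, w, patch)
        ]
        for r in range(0, h, patch)
    ]


def prompt_encoder(points: List[Tuple[int, int]], labels: List[int], patch: int = 2) -> List[Prompt]:
    """Hypothetical stand-in for SAM's prompt encoder: map each (x, y) click to its patch."""
    return [(y // patch, x // patch, label) for (x, y), label in zip(points, labels)]


def mask_decoder(emb: Embedding, prompts: List[Prompt], patch: int = 2, tol: float = 0.2) -> List[List[int]]:
    """Hypothetical stand-in for SAM's mask decoder: combine image and prompt embeddings.
    A patch is foreground if its feature is within `tol` of a foreground click and
    strictly closer to the foreground clicks than to any background click."""
    pos = [emb[r][c] for r, c, label in prompts if label == 1]
    neg = [emb[r][c] for r, c, label in prompts if label == 0]
    mask: List[List[int]] = []
    for row in emb:
        pixel_row: List[int] = []
        for feat in row:
            d_pos = min((abs(feat - p) for p in pos), default=float("inf"))
            d_neg = min((abs(feat - n) for n in neg), default=float("inf"))
            pixel_row.extend([int(d_pos <= tol and d_pos < d_neg)] * patch)
        # Upsample the patch-level decision back to pixel resolution.
        mask.extend(list(pixel_row) for _ in range(patch))
    return mask


# 4x4 image with a bright 2x2 object in the top-left corner.
image = [
    [0.9, 0.9, 0.1, 0.1],
    [0.9, 0.9, 0.1, 0.1],
    [0.1, 0.1, 0.1, 0.1],
    [0.1, 0.1, 0.1, 0.1],
]
emb = image_encoder(image)  # the expensive step: run once per image
object_mask = mask_decoder(emb, prompt_encoder([(0, 0)], [1]))      # click on the object
background_mask = mask_decoder(emb, prompt_encoder([(3, 3)], [1]))  # new prompt, same embedding
```

The point of splitting the work this way is that only `image_encoder` touches every pixel; answering another click costs just a cheap encode and decode, which mirrors why SAM can react to clicks in the browser.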

The training data was collected with a data engine that ran in 3 stages. It started with an assisted-manual stage, in which a team of annotators labelled masks with the help of the model, followed by a semi-automatic stage and finally a fully automatic stage.
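As a rough illustration of the fully automatic stage: the SAM paper describes prompting the model with a regular 32x32 grid of points and keeping only masks that are both confident (high predicted IoU) and stable. The sketch below is an assumption-laden toy, not Meta's code. `build_point_grid`, `stability_score` and `keep_mask` are written here for illustration, the thresholds (0.88 predicted IoU, 0.95 stability, logit offset 1.0) are assumed to match the defaults of the open-source `SamAutomaticMaskGenerator`, and the non-maximum suppression step that removes duplicate masks is omitted.

```python
from typing import List, Tuple


def build_point_grid(n_per_side: int) -> List[Tuple[float, float]]:
    """Evenly spaced (x, y) point prompts in normalized [0, 1] image coordinates,
    each placed at the centre of its grid cell."""
    offset = 1.0 / (2 * n_per_side)
    coords = [offset + i / n_per_side for i in range(n_per_side)]
    return [(x, y) for y in coords for x in coords]


def stability_score(logits: List[List[float]], threshold: float = 0.0, offset: float = 1.0) -> float:
    """IoU between the masks obtained by cutting the same logit map at threshold + offset
    and threshold - offset. The strict mask is a subset of the loose one, so the IoU is
    |strict| / |loose|; a score near 1 means the mask barely changes with the cutoff."""
    flat = [v for row in logits for v in row]
    strict = sum(v > threshold + offset for v in flat)
    loose = sum(v > threshold - offset for v in flat)
    return strict / loose if loose else 0.0


def keep_mask(pred_iou: float, logits: List[List[float]],
              iou_thresh: float = 0.88, stability_thresh: float = 0.95) -> bool:
    """Keep a candidate mask only if the model is confident in it AND it is stable."""
    return pred_iou >= iou_thresh and stability_score(logits) >= stability_thresh


grid = build_point_grid(32)  # 32 x 32 = 1024 point prompts per image
sharp = [[5.0, 5.0, -5.0], [5.0, -5.0, -5.0]]   # clear-cut logits: same mask at any cutoff
blurry = [[5.0, 0.5, -5.0], [0.5, -5.0, -5.0]]  # borderline pixels flip with the cutoff
```

With these inputs, the sharp candidate passes both filters, while the blurry one is rejected because its mask shrinks from three pixels to one when the cutoff moves.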

SAM has been released as open source, with its dataset available for research purposes. It is a game changer for AI-assisted labelling, and the masks it produces could potentially be used to train even more powerful models.

This article reflects my personal views and opinions only, which may be different from the companies and employers that I am associated with.